PREMUS 2025: 12th International Scientific Conference on the Prevention of Work-Related Musculoskeletal Disorders

Concurrent validity of automated computer vision-based ergonomic risk assessments using the revised NIOSH lifting equation

Introduction: Recent advances in computer vision have made it possible to estimate 3D body and joint positions from a single handheld camera. These data are increasingly used to derive inputs for ergonomics assessment tools (e.g., the Revised NIOSH Lifting Equation (RNLE)) so that ergonomic risk assessments can be performed quickly in the field. However, the accuracy of the input variables (e.g., hand locations, vertical displacement, asymmetry angle) derived from single-camera computer vision-based technology has yet to be validated against lab-grade kinematic data. Therefore, the purpose of this work was to compare input variables and outcome metrics determined from single-camera and lab-grade multi-camera pose estimation data, in order to establish the validity of automated manual material handling assessments performed with single-camera computer vision-based software.
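For readers unfamiliar with how the input variables feed the outcome metric, the following is a minimal sketch of the metric form of the RNLE as published in the NIOSH Applications Manual. The function name `rnle_rwl` and its parameter names are hypothetical. The frequency and coupling multipliers (`fm`, `cm`) come from lookup tables in the manual and are simply passed in here, defaulting to 1.0 as an assumption. The abstract does not state which values were used in the study.

```python
def rnle_rwl(h_cm, v_cm, d_cm, a_deg, fm=1.0, cm=1.0):
    """Recommended weight limit (kg) from the metric RNLE.

    h_cm  : horizontal hand location (cm)
    v_cm  : vertical hand location (cm)
    d_cm  : vertical travel distance (cm)
    a_deg : asymmetry angle (degrees)
    fm,cm : frequency and coupling multipliers (table lookups in the
            NIOSH manual; the 1.0 defaults are an assumption here)
    """
    load_constant = 23.0  # kg

    # Outside these ranges the corresponding multiplier is zero.
    if h_cm > 63 or not (0 <= v_cm <= 175) or d_cm > 175 or a_deg > 135:
        return 0.0

    hm = 1.0 if h_cm <= 25 else 25.0 / h_cm        # horizontal multiplier
    vm = 1.0 - 0.003 * abs(v_cm - 75.0)            # vertical multiplier
    dm = 1.0 if d_cm <= 25 else 0.82 + 4.5 / d_cm  # distance multiplier
    am = 1.0 - 0.0032 * a_deg                      # asymmetry multiplier

    return load_constant * hm * vm * dm * am * fm * cm


if __name__ == "__main__":
    # Illustrative inputs only, not data from the study.
    print(round(rnle_rwl(h_cm=40, v_cm=0, d_cm=140, a_deg=0), 2))
```

Because the RWL is a product of multipliers, an error in any one pose-derived input (hand location, travel distance, or asymmetry angle) propagates directly into the RWL, which is why both the inputs and the output are compared between methods.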

Methods: Forty participants completed a floor-to-shoulder height lifting task under fast- and slow-paced conditions. Participants’ body motion was captured using eight synchronized 2D video cameras positioned at ~45° increments around the participant. Video data from all eight cameras were processed through Theia Markerless software (Theia Markerless Inc., Kingston, ON, Canada) to produce validated lab-grade 3D human pose estimation kinematic data. Videos from each individual camera angle were also processed through the single-camera computer vision-based pose estimation model from Inseer (Inseer Inc., Iowa City, IA, USA). Key input variables (i.e., horizontal/vertical hand locations, vertical displacement, asymmetry angles) and output metrics (i.e., recommended weight limits (RWLs)) from the RNLE were determined from both the lab-grade and single-camera computer vision pose estimation data. Concurrent validity between pose estimation methods was assessed via Bland-Altman analyses.
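As an illustration of the agreement statistics involved, a Bland-Altman analysis can be sketched as below. This is a generic textbook formulation (mean difference with limits of agreement at ±1.96 standard deviations of the paired differences), not necessarily the exact procedure used in the study. The function name `bland_altman` and the sample values are hypothetical placeholders.

```python
from statistics import mean, stdev


def bland_altman(single_cam, lab_grade):
    """Return bias and 95% limits of agreement for paired measurements.

    single_cam, lab_grade: equal-length sequences of paired values
    (e.g., RWLs in kg from the two pose estimation methods).
    """
    if len(single_cam) != len(lab_grade) or len(single_cam) < 2:
        raise ValueError("need two equal-length sequences with >= 2 pairs")

    # Paired differences: single-camera minus lab-grade.
    diffs = [a - b for a, b in zip(single_cam, lab_grade)]
    bias = mean(diffs)                  # mean difference between methods
    half_width = 1.96 * stdev(diffs)    # sample SD (n - 1 denominator)

    return {
        "bias": bias,
        "lower_loa": bias - half_width,
        "upper_loa": bias + half_width,
        "half_width": half_width,
    }


if __name__ == "__main__":
    # Placeholder values for illustration only, not study data.
    single = [10.2, 9.8, 11.1, 10.5]
    lab = [10.0, 10.1, 10.9, 10.4]
    print(bland_altman(single, lab))
```

Here `half_width` is the distance from the mean difference to either limit of agreement, so a smaller value indicates tighter agreement between the two methods.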

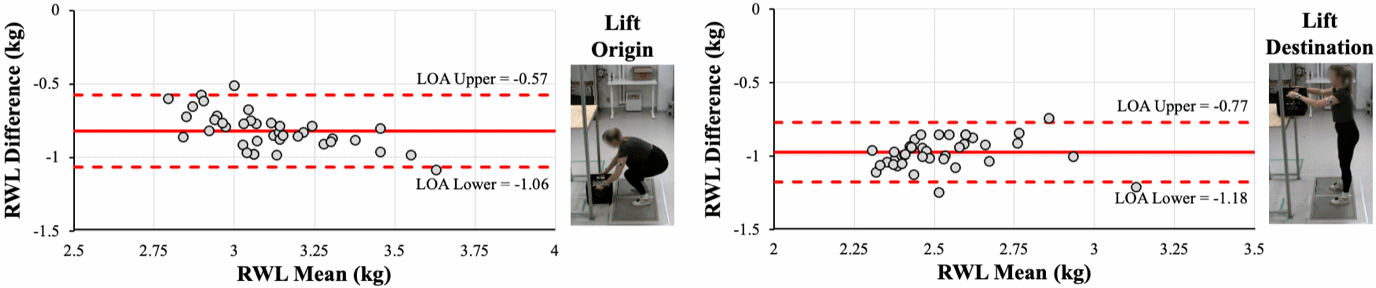

Results: Overall, RWLs differed by less than 1 kg across most camera angles (e.g., Figure 1), with the left and right camera views (i.e., sagittal views) yielding the narrowest limits of agreement (0.4–0.5 kg on either side of the mean difference). Contributing to these <1 kg differences, the single-camera computer vision-based data overestimated vertical travel distance and asymmetry angles and underestimated hand locations at both the lift origin (i.e., floor) and destination (i.e., shoulder-height shelf) across all camera angles.

Figure 1: Bland-Altman plots of NIOSH recommended weight limits (RWL) determined from pose estimated data captured from the left sagittal view during the fast-paced

Discussion: NIOSH RWLs agreed to within 1 kg, with narrow limits of agreement, for this controlled sagittal lifting task. These results provide initial concurrent validation data to inform the use of single-camera pose estimation data for automated NIOSH lifting risk assessments. However, these findings may be limited to the specific pose estimation approach used in this study and may not generalize to other pose estimation models. Analysis over a wider range of origin and destination locations will help extend our understanding of concurrent validity for pose estimation-based automated RNLE analysis.